Spark Release 2.1.0

Apache Spark 2.1.0 is the second release on the 2.x line. This release makes significant strides in the production readiness of Structured Streaming, with added support for event time watermarks and Kafka 0.10 support. In addition, this release focuses more on usability, stability, and polish, resolving over 1200 tickets.

To download Apache Spark 2.1.0, visit the downloads page. You can consult JIRA for the detailed changes. We have curated a list of high-level changes here, grouped by major modules.

Core and Spark SQL

- API updates

- SPARK-17864: Data type APIs are stable APIs.

- SPARK-18351: from_json and to_json for parsing JSON for string columns

- SPARK-16700: When creating a DataFrame in PySpark, Python dictionaries can be used as values of a StructType.

- Performance and stability

- SPARK-17861: Scalable Partition Handling. Hive metastore stores all table partition metadata by default for Spark tables stored with Hive’s storage formats as well as tables stored with Spark’s native formats. This change reduces first query latency over partitioned tables and allows for the use of DDL commands to manipulate partitions for tables stored with Spark’s native formats. Users can migrate tables stored with Spark’s native formats created by previous versions by using the MSCK command.

- SPARK-16523: Speeds up group-by aggregate performance by adding a fast aggregation cache that is backed by a row-based hashmap.

- Other notable changes

- SPARK-9876: parquet-mr upgraded to 1.8.1

Programming guides: Spark Programming Guide and Spark SQL, DataFrames and Datasets Guide.

Structured Streaming

- API updates

- SPARK-17346: Kafka 0.10 support in Structured Streaming

- SPARK-17731: Metrics for Structured Streaming

- SPARK-17829: Stable format for offset log

- SPARK-18124: Observed delay based Event Time Watermarks

- SPARK-18192: Support all file formats in structured streaming

- SPARK-18516: Separate instantaneous state from progress performance statistics

- Stability

- SPARK-17267: Long running structured streaming requirements

Programming guide: Structured Streaming Programming Guide.
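
The observed-delay watermark from SPARK-18124 can be pictured with a small stand-alone sketch. This is not Spark's implementation: the `WatermarkTracker` class and its `accept` method are hypothetical names, and the sketch updates the watermark on every event, whereas Structured Streaming advances it between triggers. It assumes the watermark is the maximum event time seen so far minus a user-chosen delay, and that events older than the watermark are treated as too late.

```python
from datetime import datetime, timedelta


class WatermarkTracker:
    """Hypothetical helper: tracks an event-time watermark with a fixed delay."""

    def __init__(self, delay):
        self.delay = delay            # how late an event may arrive
        self.max_event_time = None    # largest event time observed so far

    @property
    def watermark(self):
        """Maximum observed event time minus the allowed delay (None before any event)."""
        if self.max_event_time is None:
            return None
        return self.max_event_time - self.delay

    def accept(self, event_time):
        """Return False for events older than the watermark, True otherwise."""
        current = self.watermark
        if current is not None and event_time < current:
            return False
        if self.max_event_time is None or event_time > self.max_event_time:
            self.max_event_time = event_time
        return True


tracker = WatermarkTracker(delay=timedelta(minutes=10))
noon = datetime(2017, 1, 1, 12, 0, 0)
print(tracker.accept(noon))                          # first event is always accepted
print(tracker.accept(noon - timedelta(minutes=5)))   # within the 10-minute delay
print(tracker.accept(noon - timedelta(minutes=11)))  # older than the watermark
```

In this sketch a late event is simply rejected; what an engine does with such events (for example, dropping them from windowed aggregations) is a separate design choice.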

MLlib

- API updates

- SPARK-5992: Locality Sensitive Hashing

- SPARK-7159: Multiclass Logistic Regression in DataFrame-based API

- SPARK-16000: ML persistence: Make model loading backwards-compatible with Spark 1.x with saved models using spark.mllib.linalg.Vector columns in DataFrame-based API

- Performance and stability

- SPARK-17748: Faster, more stable LinearRegression for < 4096 features

- SPARK-16719: RandomForest: communicate fewer trees on each iteration

Programming guide: Machine Learning Library (MLlib) Guide.
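
As background for the Locality Sensitive Hashing entry (SPARK-5992), the idea behind one common LSH family, MinHash for Jaccard similarity, can be sketched in plain Python. This is an illustration of the general technique only, not the MLlib API: the function names, the seed, and the choice of CRC32 plus a linear hash modulo a Mersenne prime are all assumptions made for this example. Input sets are assumed to be non-empty.

```python
import random
import zlib

_PRIME = (1 << 61) - 1  # Mersenne prime used as the modulus (an assumption of this sketch)


def make_hashers(num_hashes, seed=42):
    """Draw (a, b) coefficients for hash functions h(x) = (a * x + b) mod p."""
    rng = random.Random(seed)
    return [(rng.randrange(1, _PRIME), rng.randrange(0, _PRIME))
            for _ in range(num_hashes)]


def minhash_signature(items, hashers):
    """For each hash function, keep the minimum hash over all items in the set."""
    codes = [zlib.crc32(item.encode("utf-8")) for item in items]
    return [min((a * c + b) % _PRIME for c in codes) for a, b in hashers]


def estimate_jaccard(sig_a, sig_b):
    """The fraction of matching signature positions estimates Jaccard similarity."""
    matches = sum(1 for x, y in zip(sig_a, sig_b) if x == y)
    return matches / len(sig_a)


hashers = make_hashers(num_hashes=128)
doc_a = {"spark", "sql", "streaming", "mllib"}
doc_b = {"spark", "sql", "streaming", "graphx"}
print(estimate_jaccard(minhash_signature(doc_a, hashers),
                       minhash_signature(doc_b, hashers)))
```

The estimate is probabilistic: more hash functions give a tighter estimate at the cost of longer signatures.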

SparkR

The main focus of SparkR in the 2.1.0 release was adding extensive support for ML algorithms, which include:

- New ML algorithms in SparkR including LDA, Gaussian Mixture Models, ALS, Random Forest, Gradient Boosted Trees, and more

- Support for multinomial logistic regression providing similar functionality as the glmnet R package

- Enable installing third party packages on workers using spark.addFile (SPARK-17577).

- Standalone installable package built with the Apache Spark release. We will be submitting this to CRAN soon.

Programming guide: SparkR (R on Spark).

GraphX

- SPARK-11496: Personalized pagerank

Programming guide: GraphX Programming Guide.

Deprecations

- MLlib

- SPARK-18592: Deprecate unnecessary Param setter methods in tree and ensemble models

Changes of behavior

- Core and SQL

- SPARK-18360: The default table path of tables in the default database will be under the location of the default database instead of always depending on the warehouse location setting.

- SPARK-18377: spark.sql.warehouse.dir is a static configuration now. Users need to set it before the start of the first SparkSession and its value is shared by sessions in the same application.

- SPARK-14393: Values generated by non-deterministic functions will not change after coalesce or union.

- SPARK-18076: Fix default Locale used in DateFormat, NumberFormat to Locale.US

- SPARK-16216: CSV and JSON data sources write timestamp and date values in ISO 8601 formatted string. Two options, timestampFormat and dateFormat, are added to these two data sources to let users control the format of timestamp and date value in string representation, respectively. Please refer to the API doc of DataFrameReader and DataFrameWriter for more details about these two configurations.

- SPARK-17427: Function SIZE returns -1 when its input parameter is null.

- SPARK-16498: LazyBinaryColumnarSerDe is fixed as the SerDe for RCFile.

- SPARK-16552: If a user does not specify the schema to a table and relies on schema inference, the inferred schema will be stored in the metastore. The schema will not be inferred again when this table is used.

- Structured Streaming

- SPARK-18516: Separate instantaneous state from progress performance statistics

- MLlib

- SPARK-17870: ChiSquareSelector now accounts for degrees of freedom by using pValue rather than raw statistic to select the top features.
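
To make the SPARK-16216 change above concrete, the following sketch shows what ISO 8601 strings for timestamp and date values look like. It uses Python's standard library rather than Spark's CSV or JSON writers, and `to_iso8601` is a hypothetical helper name. Note that Spark's `timestampFormat` and `dateFormat` options take Java-style date patterns, which this sketch does not model.

```python
from datetime import date, datetime, timezone


def to_iso8601(value):
    """Render a date or datetime as an ISO 8601 string."""
    # datetime is a subclass of date, so it must be checked first.
    if isinstance(value, datetime):
        return value.isoformat(timespec="milliseconds")
    return value.isoformat()


ts = datetime(2016, 12, 28, 9, 30, 15, 250000, tzinfo=timezone.utc)
print(to_iso8601(ts))                  # 2016-12-28T09:30:15.250+00:00
print(to_iso8601(date(2016, 12, 28)))  # 2016-12-28
```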

Known Issues

- SPARK-17647: In SQL LIKE clause, wildcard characters ‘%’ and ‘_’ right after backslashes are always escaped.

- SPARK-18908: If a StreamExecution fails to start, users need to check stderr for the error.

Credits

Last but not least, this release would not have been possible without the following contributors: Aleksander Eskilson, Aaditya Ramesh, Adam Roberts, Adrian Petrescu, Ahmed Mahran, Alex Bozarth, Alexander Shorin, Alexander Ulanov, Andrew Duffy, Andrew Mills, Andrew Ray, Angus Gerry, Anthony Truchet, Anton Okolnychyi, Artur Sukhenko, Bartek Wisniewski, Bijay Pathak, Bill Chambers, Bjarne Fruergaard, Brian Cho, Bryan Cutler, Burak Yavuz, Cen Yu Hai, Charles Allen, Cheng Lian, Chie Hayashida, Christian Kadner, Clark Fitzgerald, Cody Koeninger, Daniel Darabos, Daoyuan Wang, David Navas, Davies Liu, Denny Lee, Devaraj K, Dhruve Ashar, Dilip Biswal, Ding Ding, Dmitriy Sokolov, Dongjoon Hyun, Drew Robb, Ekasit Kijsipongse, Eren Avsarogullari, Ergin Seyfe, Eric Liang, Erik O’Shaughnessy, Eyal Farago, Felix Cheung, Ferdinand Xu, Fred Reiss, Fu Xing, Gabriel Huang, Gaetan Semet, Gang Wu, Gayathri Murali, Gu Huiqin Alice, Guoqiang Li, Gurvinder Singh, Hao Ren, Herman Van Hovell, Hiroshi Inoue, Holden Karau, Hossein Falaki, Huang Zhaowei, Huaxin Gao, Hyukjin Kwon, Imran Rashid, Jacek Laskowski, Jagadeesan A S, Jakob Odersky, Jason White, Jeff Zhang, Jianfei Wang, Jiang Xingbo, Jie Huang, Jie Xiong, Jisoo Kim, John Muller, Jose Hiram Soltren, Joseph K. Bradley, Josh Rosen, Jun Kim, Junyang Qian, Justin Pihony, Kapil Singh, Kay Ousterhout, Kazuaki Ishizaki, Kevin Grealish, Kevin McHale, Kishor Patil, Koert Kuipers, Kousuke Saruta, Krishna Kalyan, Liang Ke, Liang-Chi Hsieh, Lianhui Wang, Linbo Jin, Liwei Lin, Luciano Resende, Maciej Brynski, Maciej Szymkiewicz, Mahmoud Rawas, Manoj Kumar, Marcelo Vanzin, Mariusz Strzelecki, Mark Grover, Maxime Rihouey, Miao Wang, Michael Allman, Michael Armbrust, Michael Gummelt, Michal Senkyr, Michal Wesolowski, Mikael Staldal, Mike Ihbe, Mitesh Patel, Nan Zhu, Nattavut Sutyanyong, Nic Eggert, Nicholas Chammas, Nick Lavers, Nick Pentreath, Nicolas Fraison, Noritaka Sekiyama, Peng Meng, Pete Robbins, Peter Ableda, Peter Lee, Philipp Hoffmann, Prashant Sharma, Prince J Wesley, Priyanka Garg, Qian Huang, Qifan Pu, Rajesh Balamohan, Reynold Xin, Robert Kruszewski, Russell Spitzer, Ryan Blue, Saisai Shao, Sameer Agarwal, Sami Jaktholm, Sandeep Purohit, Sandeep Singh, Satendra Kumar, Sean Owen, Sean Zhong, Seth Hendrickson, Sharkd Tu, Shen Hong, Shivansh Srivastava, Shivaram Venkataraman, Shixiong Zhu, Shuai Lin, Shubham Chopra, Sital Kedia, Song Jun, Srinath Shankar, Stavros Kontopoulos, Stefan Schulze, Steve Loughran, Suman Somasundar, Sun Dapeng, Sun Rui, Sunitha Kambhampati, Suresh Thalamati, Susan X. Huynh, Sylvain Zimmer, Takeshi YAMAMURO, Takuya UESHIN, Tao LI, Tao Lin, Tao Wang, Tarun Kumar, Tathagata Das, Tejas Patil, Thomas Graves, Timothy Chen, Timothy Hunter, Tom Graves, Tom Magrino, Tommy YU, Tyson Condie, Uncle Gen, Vinayak Joshi, Vincent Xie, Wang Fei, Wang Lei, Wang Tao, Wayne Zhang, Weichen Xu, Weiluo (David) Ren, Weiqing Yang, Wenchen Fan, Wesley Tang, William Benton, Wojciech Szymanski, Xiangrui Meng, Xianyang Liu, Xiao Li, Xin Ren, Xin Wu, Xing SHI, Xusen Yin, Yadong Qi, Yanbo Liang, Yang Wang, Yangyang Liu, Yin Huai, Yu Peng, Yucai Yu, Yuhao Yang, Yuming Wang, Yun Ni, Yves Raimond, Zhan Zhang, Zheng RuiFeng, Zhenhua Wang, pkch, tone-zhang, yimuxi

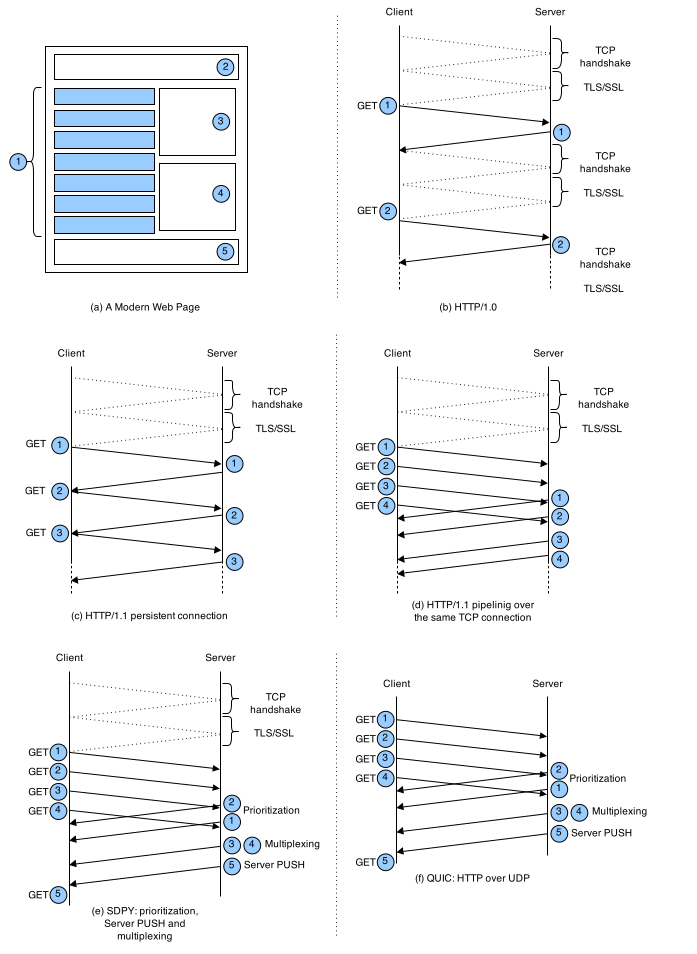

The Hyper-Text Transfer Protocol (HTTP) is perhaps the most significant protocol used on the Internet today. Web services, network-enabled appliances and the growth of network computing continue to expand the role of the HTTP protocol beyond user-driven web browsers, while increasing the number of applications that require HTTP support.

Although the java.net package provides basic functionality for accessing resources via HTTP, it doesn’t provide the full flexibility or functionality needed by many applications. HttpAsyncClient seeks to fill this void by providing an efficient, up-to-date, and feature-rich package implementing the client side of the most recent HTTP standards and recommendations.

Designed for extension while providing robust support for the base HTTP protocol, HttpAsyncClient may be of interest to anyone building HTTP-aware client applications based on an asynchronous, event-driven I/O model.

Documentation

- Quick Start - contains a simple, complete example of asynchronous request execution.

- HttpAsyncClient Examples - a set of examples demonstrating some of the more complex use scenarios.

- Javadocs

Features

- Standards-based, pure Java implementation of HTTP versions 1.0 and 1.1.

- Full implementation of all HTTP methods (GET, POST, PUT, DELETE, HEAD, OPTIONS, and TRACE) in an extensible OO framework.

- Supports encryption with the HTTPS (HTTP over SSL) protocol.

- Transparent connections through HTTP proxies.

- Tunneled HTTPS connections through HTTP proxies, via the CONNECT method.

- Connection management supports concurrent request execution. Supports setting the maximum total connections as well as the maximum connections per host. Detects and closes expired connections.

- Persistent connections using KeepAlive in HTTP/1.0 and persistence in HTTP/1.1

- The ability to set connection timeouts.

- Source code is freely available under the Apache License.

- Basic, Digest, NTLMv1, NTLMv2, NTLM2 Session, SPNEGO and Kerberos authentication schemes.

- Plug-in mechanism for custom authentication schemes.

- Automatic Cookie handling for reading Set-Cookie: headers from the server and sending them back out in a Cookie header when appropriate.

- Plug-in mechanism for custom cookie policies.

- Support for HTTP/1.1 response caching.

- Support for pipelined request execution and processing.
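
The cookie round trip named in the feature list (read `Set-Cookie:` headers, send the values back in a `Cookie` header) can be illustrated with a minimal sketch. It uses Python's standard `http.cookies` module, not HttpAsyncClient, and `cookie_header_from_set_cookie` is a hypothetical helper name. It ignores domain, path, and expiry matching, which a real cookie policy would apply before sending anything back.

```python
from http.cookies import SimpleCookie


def cookie_header_from_set_cookie(set_cookie_values):
    """Build a Cookie header value from a list of Set-Cookie header values."""
    jar = SimpleCookie()
    for value in set_cookie_values:
        jar.load(value)  # parses the name=value pair and attributes such as Path
    # Only name=value pairs are sent back; attributes stay on the client side.
    return "; ".join(f"{name}={morsel.value}" for name, morsel in jar.items())


received = ["session=abc123; Path=/", "theme=dark; Max-Age=3600"]
print(cookie_header_from_set_cookie(received))  # session=abc123; theme=dark
```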

Standards Compliance

HttpAsyncClient strives to conform to the following specifications endorsed by the Internet Engineering Task Force (IETF) and the internet at large:

- RFC 1945 Hypertext Transfer Protocol – HTTP/1.0

- RFC 2616 Hypertext Transfer Protocol – HTTP/1.1

- RFC 2617 HTTP Authentication: Basic and Digest Access Authentication

- RFC 2109 HTTP State Management Mechanism (Cookies)

- RFC 2965 HTTP State Management Mechanism (Cookies v2)